A seamless, drop-in Elasticsearch replacement that keeps your Logstash and Kibana workflow intact

For years, the ELK Stack (Elasticsearch, Logstash, Kibana) has been popular for log analytics and observability. Its ease of use, powerful full-text search, and rich ecosystem make it a good choice for developers worldwide.

However, as your data scales, the "E" in ELK often becomes a bottleneck. With today's "log explosion," storing and indexing petabytes of logs in Elasticsearch generates massive infrastructure bills. You are often forced into a difficult trade-off: keep paying exorbitant storage and CPU costs, or aggressively sample data and shorten retention periods, sacrificing visibility.

What if you didn't have to make this trade-off or go through a painful migration? Today, we'll discuss how to swap out the expensive storage engine for one that is faster and up to 80% cheaper, without the migration headaches.

Welcome to the VLK Stack: VeloDB, Logstash, and Kibana. Let's see a demo video first.

Why is Elasticsearch often the bottleneck?

Elasticsearch is excellent, but its architecture is resource-intensive at scale.

- Storage Bloat: Traditional inverted indexes and row-oriented storage in Lucene require significant disk space.

- Compute Overhead: Parsing JSON and maintaining a large JVM heap for indexing consume substantial CPU and RAM.

- The Trade-off: To manage costs, teams often delete logs after 7 days or move them into "Cold Storage" (like S3), making them practically unsearchable when you need to debug a critical production incident.

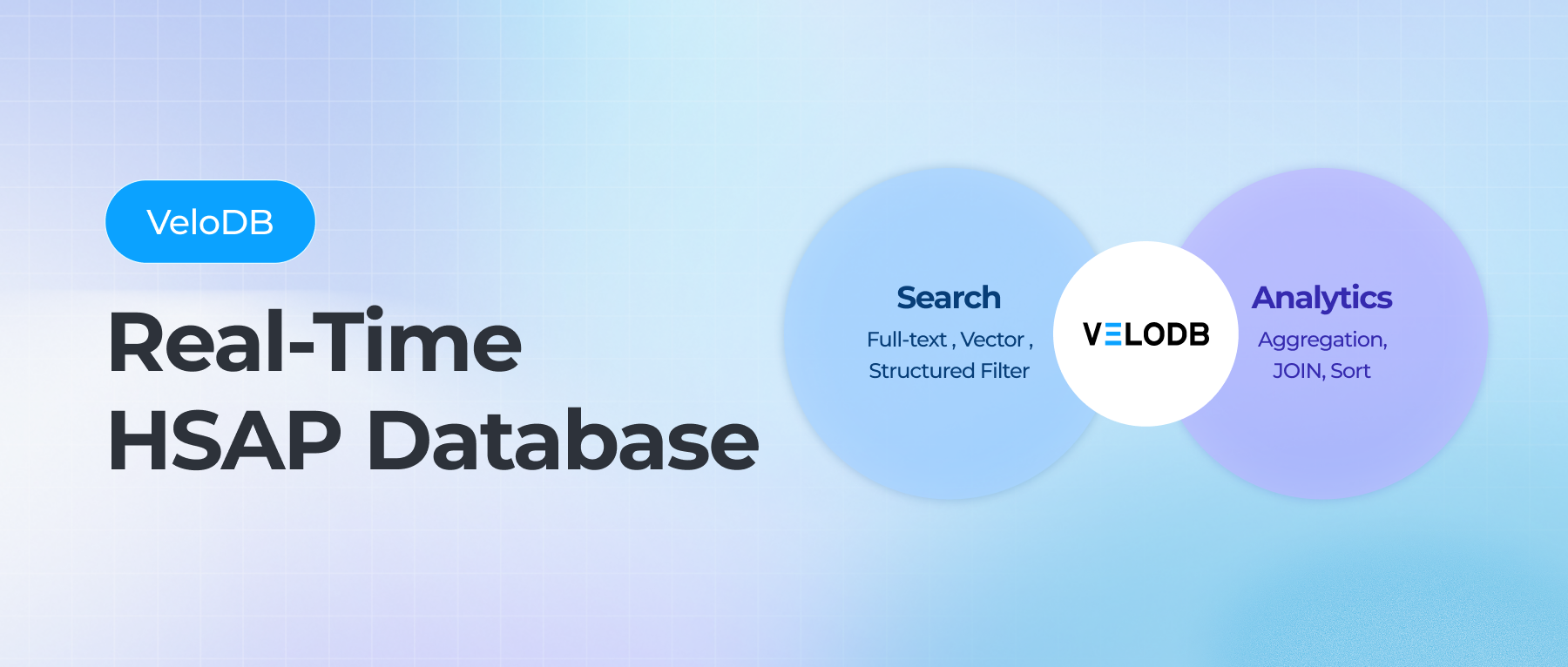

Here is how VeloDB addresses cost efficiency without sacrificing performance or features:

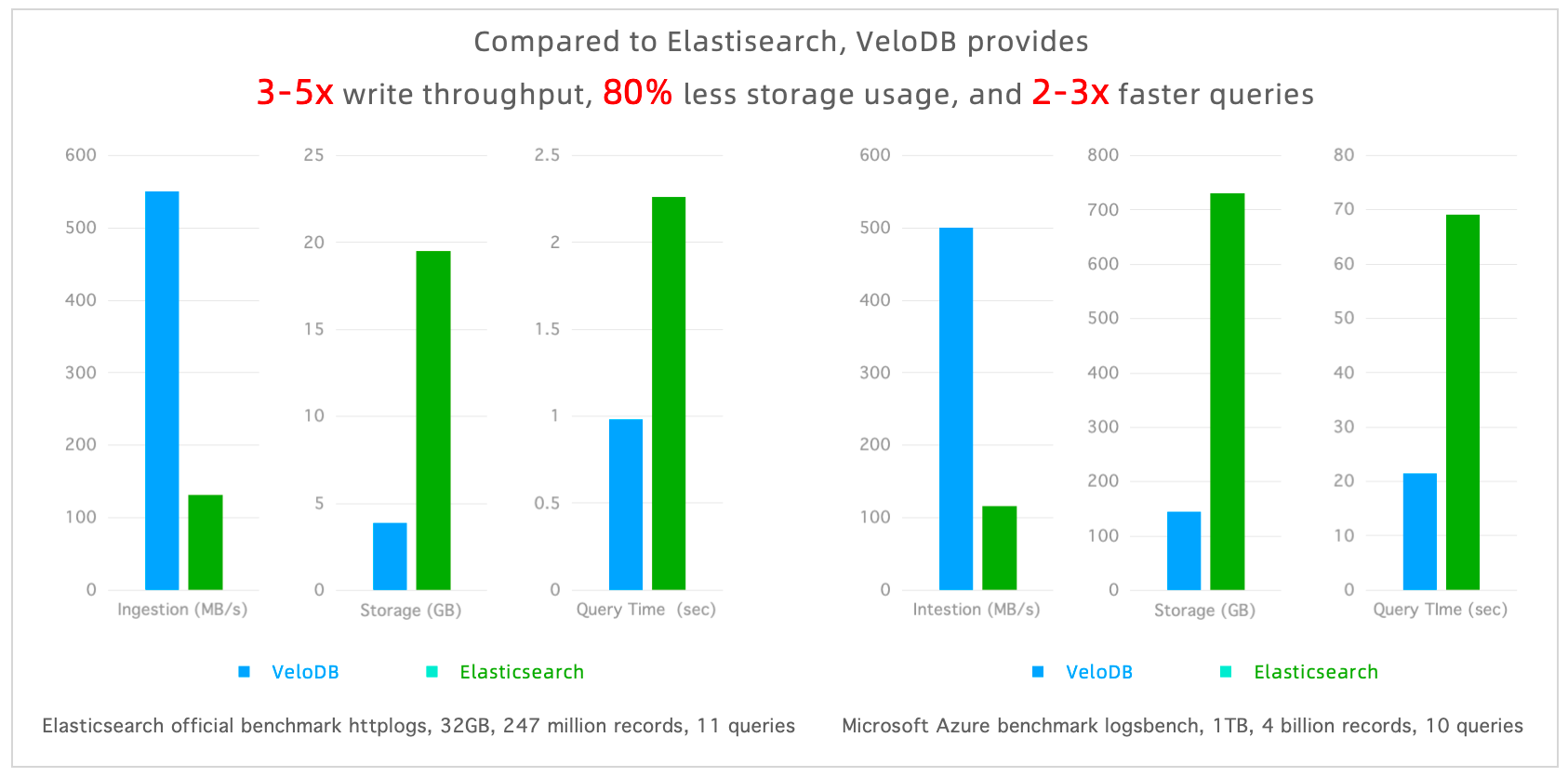

- CPU and Disk Storage Reduction: VeloDB leverages columnar storage, optimized index structures, and advanced compression to drastically reduce hardware requirements, delivering up to 80% savings through fewer CPU cycles and less disk volume.

- Inverted Index Support: VeloDB supports inverted indexes and full-text search just like Elasticsearch, so it can still meet your search SLAs.

- Faster search and aggregation queries: VeloDB was designed for real-time analytics, so it supports the wide range of aggregations commonly used in observability. For search, VeloDB implements an inverted index optimized for log search, and in particular for top-N search queries such as `SELECT * FROM t WHERE message MATCH 'error' ORDER BY time DESC LIMIT 100`. As a result, VeloDB is 2x faster than Elasticsearch for search queries and 10x faster for aggregation queries.
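To make the top-N search pattern concrete, here is a toy, in-memory sketch of the general technique: find matching rows through an inverted index (term to row IDs), then keep only the N newest matches with a bounded heap instead of sorting every hit. It illustrates the idea only and is not VeloDB's implementation; the sample `rows`, the whitespace tokenizer, and the function names are assumptions made for the example.

```python
import heapq
from collections import defaultdict

# Hypothetical sample log rows: (timestamp, message). Timestamps are ints for simplicity.
rows = [
    (1, "connection error on db-1"),
    (2, "request ok"),
    (3, "timeout error calling auth"),
    (4, "disk usage ok"),
    (5, "error: disk full"),
]

def tokenize(text):
    # Naive tokenizer (an assumption): split on whitespace, strip punctuation, lowercase.
    return [tok.strip(":,.").lower() for tok in text.split()]

def build_inverted_index(rows):
    # Map each term to the set of row IDs whose message contains it.
    index = defaultdict(set)
    for row_id, (_, message) in enumerate(rows):
        for term in tokenize(message):
            index[term].add(row_id)
    return index

def topn_search(rows, index, term, n):
    # Same intent as: SELECT * FROM t WHERE message MATCH 'term' ORDER BY time DESC LIMIT n
    matching_ids = index.get(term.lower(), set())
    # heapq.nlargest keeps a heap of at most n rows instead of sorting all matches.
    return heapq.nlargest(n, (rows[i] for i in matching_ids), key=lambda r: r[0])

index = build_inverted_index(rows)
print(topn_search(rows, index, "error", 2))
```

Running it prints the two newest "error" rows, `[(5, 'error: disk full'), (3, 'timeout error calling auth')]`. The index narrows the candidate set, and the bounded heap keeps the `ORDER BY ... LIMIT` step cheap even when many rows match.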

How can users adopt the solution and avoid all the migration headaches?

The Solution: VeloDB's Seamless Compatibility with Logstash and Kibana

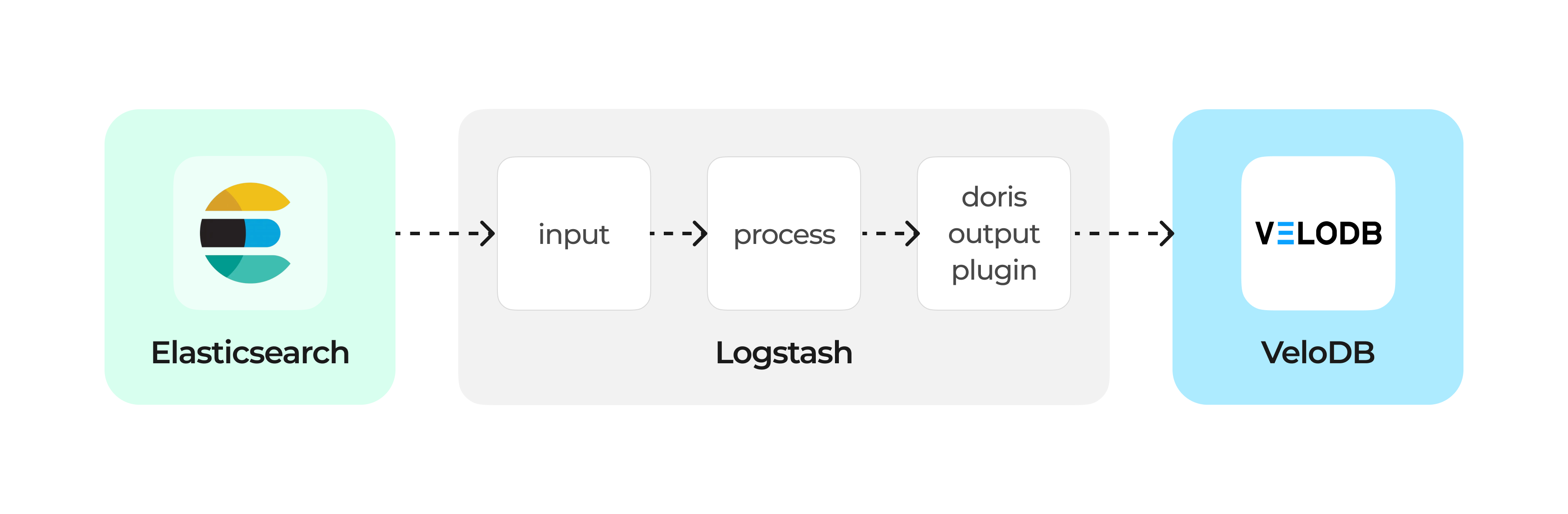

To support this integration, VeloDB developed two components: a dedicated Logstash Doris output plugin that writes logs directly into VeloDB with the same ingestion pipeline structure, and es2doris, a proxy that makes Kibana think it's talking to Elasticsearch so that its dashboards and Discover UI work without modification.

How it works:

1. Logstash Compatibility

You don't need to set up a new log ingestion and ETL pipeline. VeloDB supports the standard `logstash-output-doris` plugin, which uses Stream Load to write logs into VeloDB in batches, transactionally and efficiently.

- Simply update the output part of your Logstash config

- No changes to filters, parsing, or pipelines

- No new ingestion tool required

Your ingestion workflow remains fully intact.
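The plugin handles Stream Load for you, but it helps to see roughly what one batch looks like on the wire. The sketch below only builds (it does not send) a single Stream Load request: the batch is serialized as newline-delimited JSON and sent with HTTP PUT to `/api/<db>/<table>/_stream_load`, using the `format`, `read_json_by_line`, and `label` headers described in the Apache Doris Stream Load documentation. The function name, host, database, table, and credentials are hypothetical, and this is not the plugin's actual code.

```python
import base64
import json
import urllib.request
import uuid

def build_stream_load_request(events, host, db, table, user, password, label=None):
    """Build (but do not send) one Stream Load request for a batch of log events."""
    # One JSON object per line, which is what read_json_by_line=true expects.
    body = "\n".join(json.dumps(e, separators=(",", ":")) for e in events).encode("utf-8")
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    headers = {
        "Authorization": f"Basic {token}",
        "Expect": "100-continue",
        "format": "json",
        "read_json_by_line": "true",
        # Each load is a transaction identified by its label; reusing the same
        # label when retrying a batch lets the server reject the duplicate.
        "label": label or f"logstash_{uuid.uuid4().hex}",
    }
    return urllib.request.Request(
        f"http://{host}/api/{db}/{table}/_stream_load",
        data=body,
        headers=headers,
        method="PUT",
    )
```

In practice, a Stream Load sent to a frontend node is usually redirected to a backend node, which is why the Doris docs use `curl --location-trusted`. Python's `urllib` does not follow redirects for PUT requests, so this sketch stops at building the request.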

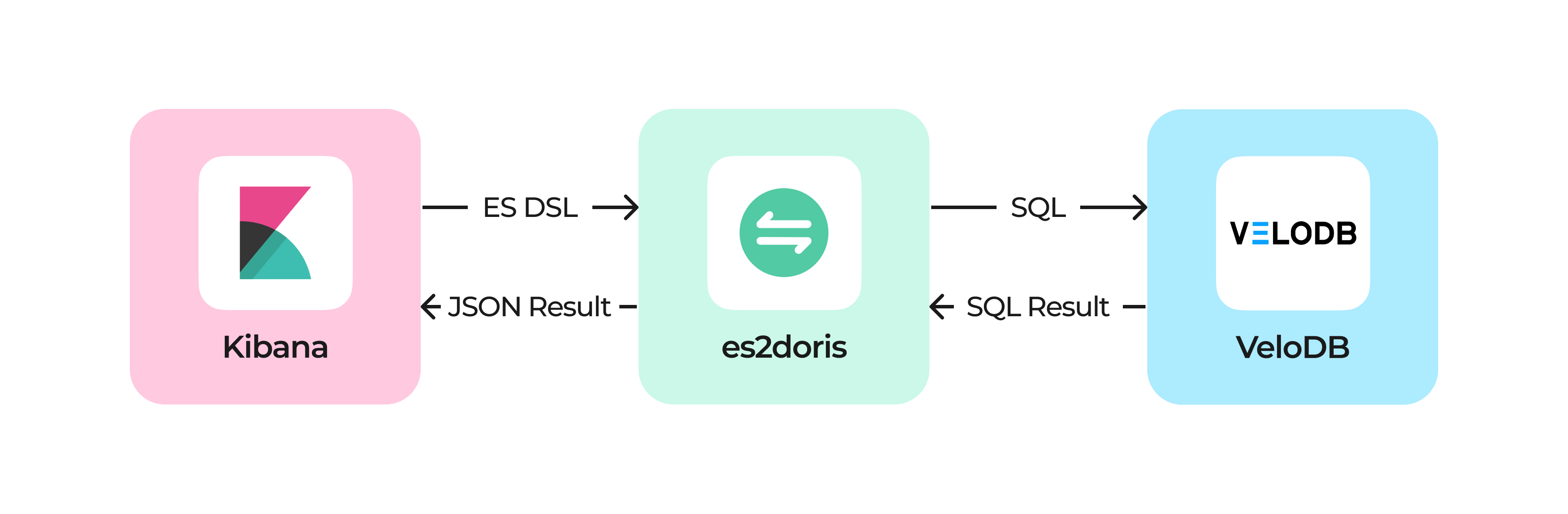

2. Kibana Compatibility

This is the bridge that makes the migration "drop-in." Kibana doesn’t know it’s talking to VeloDB, thanks to es2doris, a lightweight proxy layer: Kibana believes it is talking to Elasticsearch, so its dashboards and Discover UI work without modification.

The proxy:

- Accepts Elasticsearch API requests and parses the Query DSL

- Converts queries to VeloDB SQL

- Returns results in Elasticsearch-compatible JSON
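To make the proxy's role concrete, here is a toy translator for a tiny subset of the Query DSL (a `bool` query with `match` and `range` clauses, plus `sort` and `size`), together with a helper that wraps SQL rows in an Elasticsearch-shaped search response. It is a hypothetical sketch of the idea, not es2doris itself, whose internals are not described here. It emits the Apache Doris `MATCH_ANY` full-text operator; the function names, the index name, and the simplified `hits.total` are assumptions.

```python
def as_list(value):
    # The DSL allows a single clause or a list of clauses; normalize to a list.
    return value if isinstance(value, list) else [value]

def quote(value):
    # Minimal SQL string-literal escaping for this sketch: double any single quotes.
    return "'" + str(value).replace("'", "''") + "'"

RANGE_OPS = {"gte": ">=", "gt": ">", "lte": "<=", "lt": "<"}

def dsl_to_sql(table, body):
    """Translate a tiny subset of the Elasticsearch Query DSL into a SQL string."""
    conditions = []
    bool_query = body.get("query", {}).get("bool", {})
    clauses = as_list(bool_query.get("must", [])) + as_list(bool_query.get("filter", []))
    for clause in clauses:
        if "match" in clause:
            field, text = next(iter(clause["match"].items()))
            conditions.append(f"{field} MATCH_ANY {quote(text)}")
        elif "range" in clause:
            field, bounds = next(iter(clause["range"].items()))
            for key, op in RANGE_OPS.items():
                if key in bounds:
                    conditions.append(f"{field} {op} {quote(bounds[key])}")
        else:
            raise ValueError(f"unsupported clause: {clause}")
    sql = f"SELECT * FROM {table}"
    if conditions:
        sql += " WHERE " + " AND ".join(conditions)
    # Assumes the {"field": {"order": "desc"}} or {"field": "desc"} sort forms.
    orders = []
    for item in as_list(body.get("sort", [])):
        field, spec = next(iter(item.items()))
        order = spec["order"] if isinstance(spec, dict) else spec
        orders.append(f"{field} {order.upper()}")
    if orders:
        sql += " ORDER BY " + ", ".join(orders)
    # Elasticsearch returns 10 hits when "size" is omitted.
    return sql + f" LIMIT {int(body.get('size', 10))}"

def rows_to_es_response(rows, index, took_ms=0):
    """Wrap SQL result rows (dicts) in an Elasticsearch-style search response."""
    return {
        "took": took_ms,
        "timed_out": False,
        # Simplification: counts only the returned rows; a real proxy would
        # need a separate COUNT query for the true total.
        "hits": {
            "total": {"value": len(rows), "relation": "eq"},
            "hits": [
                {"_index": index, "_id": str(i), "_source": row}
                for i, row in enumerate(rows)
            ],
        },
    }
```

For a Discover-style request that matches `error` in `message`, filters on `time`, sorts by `time` descending, and asks for 100 hits, `dsl_to_sql("t", body)` produces `SELECT * FROM t WHERE message MATCH_ANY 'error' AND time >= '2024-01-01 00:00:00' ORDER BY time DESC LIMIT 100`. A real proxy must also cover aggregations and many more query types, but the principle is the same: translation happens per request, so Kibana never sees the backend change.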

This preserves:

- Discover

- Dashboard

- Saved searches

- Alerts

All with zero dashboard changes.

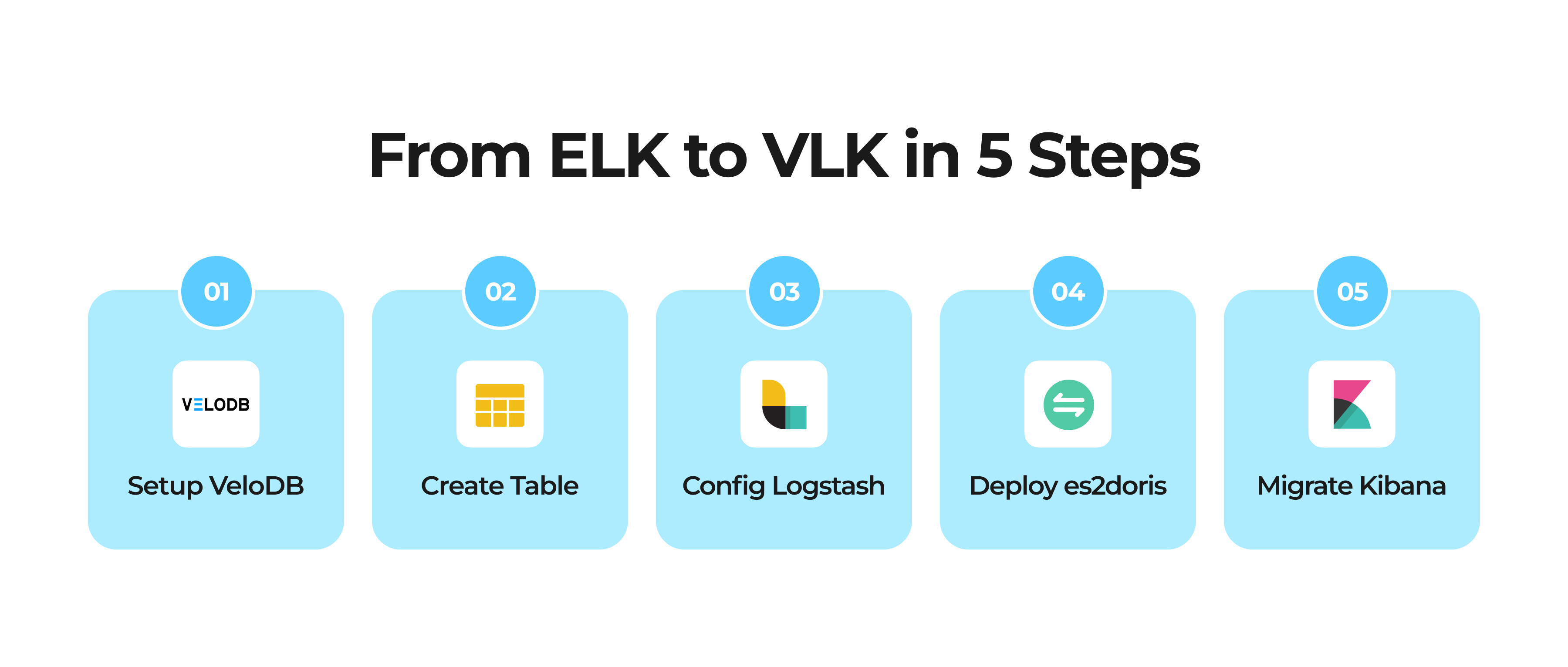

Migration Guide: From ELK to VLK in 5 Steps

Ready to cut your observability bill? Here is the path to migration.

Step 1: Set Up VeloDB

Create a free account and a cluster on VeloDB Cloud. It’s fully managed and ready for production workloads.

Step 2: Create Table

Map your Elasticsearch index mapping to a VeloDB table.

- Tip: Use VeloDB’s `VARIANT` type or dynamic schema features if your JSON logs have evolving fields, and enable inverted indexes on text columns for fast keyword search.
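Here is a minimal sketch of that mapping step, assuming you only need the top-level fields of an Elasticsearch mapping: it turns `properties` into a Doris-style `CREATE TABLE` statement, maps `text` fields to `TEXT` with a tokenized inverted index, `keyword` fields to `VARCHAR` with an untokenized inverted index, and object fields to `VARIANT`. The type table, the `unicode` parser choice, the bucket count, and the function name are illustrative assumptions; check the VeloDB documentation for the types and index options your version supports.

```python
import re

# Illustrative Elasticsearch-to-Doris type mapping (an assumption; adjust as needed).
ES_TO_DORIS = {
    "text": "TEXT",
    "keyword": "VARCHAR(256)",
    "date": "DATETIME(3)",
    "long": "BIGINT",
    "integer": "INT",
    "double": "DOUBLE",
    "float": "FLOAT",
    "boolean": "BOOLEAN",
    "object": "VARIANT",
}

def mapping_to_ddl(table, es_mapping, time_field):
    """Turn an Elasticsearch mapping's top-level properties into a CREATE TABLE."""
    props = es_mapping["properties"]
    # Key columns must lead the schema, so the time field goes first.
    names = [time_field] + [n for n in props if n != time_field]
    columns, indexes = [], []
    for name in names:
        # Fields that only declare sub-"properties" are objects in Elasticsearch.
        es_type = props[name].get("type", "object")
        columns.append(f"  `{name}` {ES_TO_DORIS.get(es_type, 'STRING')}")
        idx = "idx_" + re.sub(r"\W", "_", name)
        if es_type == "text":
            # Tokenized inverted index for full-text MATCH queries.
            indexes.append(f'  INDEX {idx} (`{name}`) USING INVERTED PROPERTIES("parser" = "unicode")')
        elif es_type == "keyword":
            # Untokenized inverted index for exact-value filters.
            indexes.append(f"  INDEX {idx} (`{name}`) USING INVERTED")
    body = ",\n".join(columns + indexes)
    return (
        f"CREATE TABLE `{table}` (\n{body}\n)\n"
        f"DUPLICATE KEY(`{time_field}`)\n"
        "DISTRIBUTED BY RANDOM BUCKETS 10;"
    )
```

Using the timestamp as the duplicate key keeps rows ordered by time, which suits the "newest logs first" queries Kibana issues; unknown field types fall back to `STRING` here, which is a deliberately conservative choice.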

Step 3: Configure Logstash

Update the output section of your `logstash.conf` to point to VeloDB using the Doris output plugin. This handles the migration of live data: new logs flow into VeloDB from this point on.
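Only the `output` section changes. The helper below renders such a section as text so its shape is easy to see. The option names (`http_hosts`, `user`, `password`, `db`, `table`, `headers`, `mapping`) follow the Apache Doris documentation for the Logstash Doris output plugin as we understand it and should be verified against the plugin version you install; the host, database, table, column mapping, and the helper itself are hypothetical.

```python
def q(value):
    # Wrap a value in double quotes; reject embedded quotes rather than escaping them,
    # since Logstash only processes escapes when config.support_escapes is enabled.
    if '"' in value:
        raise ValueError(f"unsupported character in {value!r}")
    return f'"{value}"'

def render_doris_output(http_hosts, db, table, user, password, mapping=None):
    """Render a Logstash output section that targets the Doris output plugin."""
    lines = [
        "output {",
        "  doris {",
        f"    http_hosts => [{', '.join(q(h) for h in http_hosts)}]",
        f"    user => {q(user)}",
        f"    password => {q(password)}",
        f"    db => {q(db)}",
        f"    table => {q(table)}",
        # Stream Load headers: newline-delimited JSON, one event per line.
        "    headers => {",
        '      "format" => "json"',
        '      "read_json_by_line" => "true"',
        "    }",
    ]
    if mapping:
        # Column name => Logstash sprintf reference, e.g. "ts" => "%{@timestamp}".
        lines.append("    mapping => {")
        lines += [f"      {q(col)} => {q(ref)}" for col, ref in mapping.items()]
        lines.append("    }")
    lines += ["  }", "}"]
    return "\n".join(lines)
```

For example, passing `password="${DORIS_PASSWORD}"` keeps the secret out of the file, because Logstash substitutes `${VAR}` environment variable references in its configuration. Your `input` and `filter` sections stay exactly as they are.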

Step 4: Deploy es2doris

Join the waiting list to get the es2doris proxy software.

Step 5: Migrate Kibana

- In your existing Kibana, go to Stack Management -> Saved Objects and export your Dashboards, Visualizations, and Index Patterns to a file (Kibana 7.x and later export NDJSON, `.ndjson`).

- Deploy a new Kibana instance and set its Elasticsearch address (the `elasticsearch.hosts` setting in `kibana.yml`) to the `es2doris` address.

- In your new Kibana, go to Stack Management -> Saved Objects and import the file you exported.
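If you prefer to script this step instead of clicking through the UI, Kibana exposes the same operations through its Saved Objects HTTP API (`POST /api/saved_objects/_export` and `POST /api/saved_objects/_import`, both of which require the `kbn-xsrf` header). The sketch below only builds the two requests with the Python standard library; the Kibana URLs are placeholders, the function names are hypothetical, and authentication is omitted and would need to be added for a secured cluster.

```python
import json
import urllib.request
import uuid

def export_request(kibana_url, types=("dashboard", "visualization", "index-pattern")):
    """Build (but do not send) a Saved Objects export request for the old Kibana."""
    payload = {"type": list(types), "includeReferencesDeep": True}
    return urllib.request.Request(
        f"{kibana_url}/api/saved_objects/_export",
        data=json.dumps(payload).encode("utf-8"),
        headers={"kbn-xsrf": "true", "Content-Type": "application/json"},
        method="POST",
    )

def import_request(kibana_url, ndjson_bytes, filename="export.ndjson"):
    """Build a multipart Saved Objects import request for the new Kibana."""
    boundary = uuid.uuid4().hex
    # Single multipart/form-data part named "file" holding the exported NDJSON.
    body = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        "Content-Type: application/ndjson\r\n\r\n"
    ).encode("utf-8") + ndjson_bytes + f"\r\n--{boundary}--\r\n".encode("utf-8")
    return urllib.request.Request(
        f"{kibana_url}/api/saved_objects/_import?overwrite=true",
        data=body,
        headers={"kbn-xsrf": "true", "Content-Type": f"multipart/form-data; boundary={boundary}"},
        method="POST",
    )
```

Sending them is then a matter of opening the export request with `urllib.request.urlopen`, reading the NDJSON response body, and passing those bytes to `import_request` for the new Kibana.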

Done! You can now open Kibana's Discover tab to search logs or view your dashboards. The experience is identical, but the backend is now powered by VeloDB, which cuts costs by up to 80% compared with Elasticsearch.

No UI change. No workflow change. No retraining required.

Summary

The transition from ELK to VLK solves the biggest pain point of observability: cost. VLK delivers the best of both worlds:

- None of Elasticsearch’s cost drawbacks: up to 80% lower cost, with better performance

- All the strengths of ELK — powerful search, intuitive dashboards, flexible pipelines and more

- Seamless migration with little effort

ELK made observability mainstream. VLK makes observability affordable again.

You can join the waiting list to get the es2doris proxy and a detailed migration guide.