The Credit Card Center of this commercial bank generates over 14 billion log entries (~80 TB) per day, with a total archived volume exceeding 40 PB. Its early log platform, built on Elasticsearch, faced several challenges:

- High storage cost

- Low real-time write performance

- Slow text search

- Inadequate log analysis capabilities

By replacing Elasticsearch with Apache Doris, the center has cut resource usage by 50%, sped up queries by 2-4×, and greatly simplified operations and maintenance.

Logs are super helpful, but a pain to handle

Logs record diverse data and information that help engineers understand system status. However, handling tens of terabytes of logs every day at the Credit Card Center brings several headaches:

- Heterogeneous formats: Logs are semi-structured free text, rich in information but difficult to analyze uniformly.

- Diverse analytics requirements: There are numerous log types and diverse analysis needs across their business lines, databases, and middleware.

- Low maintenance efficiency: Whenever an incident occurs, engineers must log into each server individually to view its logs.

- Lack of support for in-depth analysis: It is hard to correlate event logs across hardware and software to gauge business impact, or to perform correlation analysis on historical data.

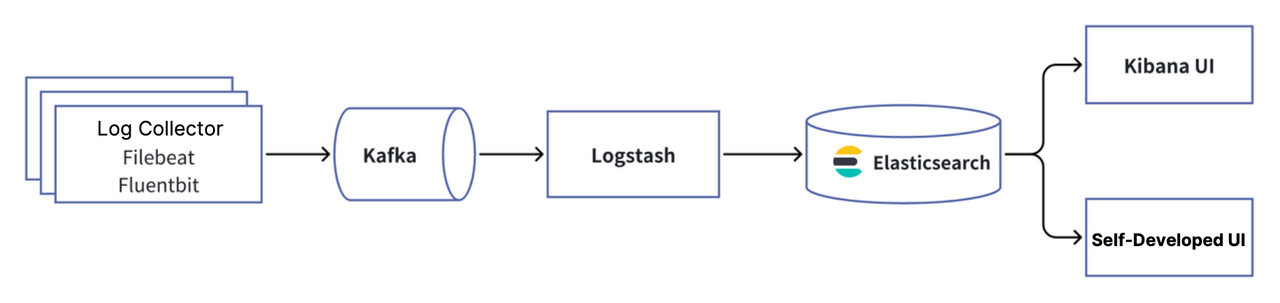

Early Elasticsearch-based log platform

Previously, the bank's data team built a log platform on the ELK stack to store and analyze logs from application components, middleware, and databases. Logs were collected by Filebeat into Kafka, processed by Logstash, and stored in Elasticsearch. Developers and engineers queried them through Kibana and a custom UI.

However, the pain points were becoming increasingly unbearable:

- High storage cost: Elasticsearch stored the same data in multiple structures, including forward indexes, inverted indexes, and columnar storage, which multiplied storage costs.

- Low real-time write throughput: As data grew, Elasticsearch struggled to ingest data at GB/s rates or handle millions of events per second.

- Limited analytic capabilities: Elasticsearch was limited to single-table queries and did not support JOINs, subqueries, or views, which restricted its ability to handle complex log analytics.
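To put the write requirement in perspective, the daily figures quoted at the top of this article (over 14 billion entries, about 80 TB) can be turned into average rates. This is plain arithmetic under two stated assumptions: decimal units (1 TB = 10^12 bytes) and load spread evenly across 24 hours. Real log traffic is bursty, so peak rates sit above these averages.

```python
# Average ingest rates derived from the article's daily figures.
# Assumptions: 1 TB = 10**12 bytes, load spread evenly over the day.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def average_ingest_rates(entries_per_day: float, bytes_per_day: float) -> dict:
    """Return average events/s, bytes/s, and mean entry size in bytes."""
    return {
        "events_per_sec": entries_per_day / SECONDS_PER_DAY,
        "bytes_per_sec": bytes_per_day / SECONDS_PER_DAY,
        "avg_entry_bytes": bytes_per_day / entries_per_day,
    }


rates = average_ingest_rates(entries_per_day=14e9, bytes_per_day=80e12)
print(f"{rates['events_per_sec']:,.0f} events/s on average")        # 162,037 events/s on average
print(f"{rates['bytes_per_sec'] / 1e9:.2f} GB/s on average")        # 0.93 GB/s on average
print(f"{rates['avg_entry_bytes'] / 1e3:.1f} KB per entry on average")  # 5.7 KB per entry on average
```

Even the daily average lands close to 1 GB/s, which is why sustained GB/s-level ingestion is a baseline requirement for this workload rather than an edge case.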

Comparing Apache Doris and Elasticsearch

While exploring alternatives to Elasticsearch, the team found that Apache Doris stood out for the following advantages and decided to test it.

- High-throughput, low-latency writes: Apache Doris sustains GB/s write throughput (TBs of data per day) with sub-second latency.

- Low-cost storage: Public benchmark results show that Apache Doris reduces storage costs by 60–80% compared to Elasticsearch. Offloading cold data to S3 or HDFS can cut costs by a further 50%.

- Fast full-text search: Apache Doris supports inverted indexes and full-text search, enabling sub-second response times for common log analytics queries such as keyword-based log inspection and trend analysis.

- Log analytics capabilities: Apache Doris supports complex log processing and analysis, including keyword search, aggregation, multi-table JOINs, subqueries, UDFs, and logical and materialized views.

- Open ecosystem: It ingests data from popular log sources via HTTP APIs and connects to visualization tools through the standard MySQL protocol and syntax.

- Easy cluster management: It provides distributed cluster management with online scaling and upgrades, so cluster expansion and version upgrades require no service downtime.
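As an illustration of the HTTP ingestion path mentioned above, the sketch below assembles a request for Doris's Stream Load interface, which accepts an HTTP PUT to `/api/{db}/{table}/_stream_load` on the FE's HTTP port. The helper name, host, database, table, credentials, and label are all hypothetical, and the function only builds the request without sending it. Actually sending it requires an HTTP client that follows the FE's redirect to a BE while re-sending credentials, which is what `curl --location-trusted` does in the Doris documentation. The bank's real collection pipeline is not described at this level of detail.

```python
import base64
import json


def build_stream_load_request(host, port, db, table, user, password, records, label):
    """Assemble (url, headers, body) for a Stream Load of JSON-lines data.

    Hypothetical helper for illustration: it builds the request but does not send it.
    """
    # Stream Load endpoint on the FE's HTTP port
    url = f"http://{host}:{port}/api/{db}/{table}/_stream_load"
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    headers = {
        "Authorization": f"Basic {token}",
        "Expect": "100-continue",
        "label": label,               # Doris rejects a label already used by a successful load, so retries do not load twice
        "format": "json",
        "read_json_by_line": "true",  # body holds one JSON object per line
    }
    # Serialize each log record as one compact JSON line
    body = "\n".join(json.dumps(r, separators=(",", ":")) for r in records).encode("utf-8")
    return url, headers, body


url, headers, body = build_stream_load_request(
    host="doris-fe.example.internal",  # hypothetical FE host
    port=8030,                         # FE HTTP port (Doris default)
    db="logs",                         # hypothetical database
    table="app_log",                   # hypothetical table
    user="loader",
    password="secret",
    records=[
        {"ts": "2024-01-01 00:00:00", "level": "ERROR", "msg": "timeout"},
        {"ts": "2024-01-01 00:00:01", "level": "INFO", "msg": "retry ok"},
    ],
    label="app_log_batch_0001",        # hypothetical, unique per batch
)
print(url)  # http://doris-fe.example.internal:8030/api/logs/app_log/_stream_load
```

Batching records into one request per label keeps each load atomic, and the label makes a retried batch safe to resend.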

To compare Apache Doris and Elasticsearch for their own use cases, the team ran tests on the httplogs dataset and on their production log data. The results showed that, at the same data volume, Apache Doris outperformed Elasticsearch on all key metrics:

- 58% less disk usage

- 32% higher peak write throughput

- 38% lower query latency

Moreover, Elasticsearch ran on 9 servers (16 cores, 32 GB each), while Apache Doris used only 4 servers (8 cores, 32 GB each). That means Apache Doris used 32 CPU cores versus 144, less than a quarter of the CPU resources.
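The hardware comparison can be checked with simple arithmetic over the server specs quoted above. Note that this counts provisioned capacity only, not actual utilization during the tests.

```python
# Cluster sizes from the user's test: server count, cores and GB of memory per server.
clusters = {
    "Elasticsearch": {"servers": 9, "cores": 16, "mem_gb": 32},
    "Apache Doris": {"servers": 4, "cores": 8, "mem_gb": 32},
}


def totals(spec: dict) -> tuple:
    """Total cores and total memory (GB) across all servers in a cluster."""
    return spec["servers"] * spec["cores"], spec["servers"] * spec["mem_gb"]


es_cores, es_mem = totals(clusters["Elasticsearch"])
doris_cores, doris_mem = totals(clusters["Apache Doris"])
print(f"cores: {doris_cores} vs {es_cores} ({doris_cores / es_cores:.0%})")     # cores: 32 vs 144 (22%)
print(f"memory: {doris_mem} GB vs {es_mem} GB ({doris_mem / es_mem:.0%})")   # memory: 128 GB vs 288 GB (44%)
```

In other words, the Doris test cluster ran with about 22% of the cores and 44% of the memory provisioned for Elasticsearch.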

New Apache Doris-based log platform

Based on these results, the Credit Card Center decided to replace Elasticsearch with Apache Doris. On top of Apache Doris, they have built a unified platform that handles end-to-end log management (collection, cleansing, computation, storage, search, monitoring, and analysis) in one place.

At the same time, they have replaced the Kibana UI with the VeloDB UI, an Apache Doris-native interface that better aligns with the center’s business needs and operational workflows.

With the Apache Doris-based solution, they now enjoy the convenience of:

- A unified log query interface across clusters and data centers

- End-to-end trace analysis with TraceID correlation between logs and metrics

- Schema anomaly detection for logs with real-time alerts

- Various performance optimizations at the system and storage levels (e.g., dynamic partitioning, tiered data storage, efficient data compression and indexing strategies) to enhance query and write efficiency
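Dynamic partitioning, one of the storage-level optimizations listed above, keeps a rolling window of time-based partitions so that expired log data can be dropped a whole partition at a time rather than row by row. The sketch below models that idea for daily partitions. Its parameters are named after Doris's `dynamic_partition.start`, `dynamic_partition.end`, and `dynamic_partition.prefix` table properties, but the function itself is a hypothetical illustration, not Doris code, and it ignores details such as whether historical partitions are back-filled. The bank's actual retention settings are not given in the article.

```python
from datetime import date, timedelta


def dynamic_partition_window(today: date, start: int, end: int, prefix: str = "p") -> list:
    """Return the names of daily partitions inside a rolling window.

    Hypothetical model of day-granularity dynamic partitioning:
    partitions older than today + start days fall outside the window (dropped),
    and partitions up to today + end days are created ahead of time.
    """
    if start > 0 or end < 0:
        raise ValueError("expected start <= 0 <= end")
    return [
        # Partition name = prefix + yyyyMMdd of the partition's day
        f"{prefix}{today + timedelta(days=offset):%Y%m%d}"
        for offset in range(start, end + 1)
    ]


# 2024 is a leap year, so this window crosses Feb 29
print(dynamic_partition_window(date(2024, 3, 1), start=-2, end=1))
# ['p20240228', 'p20240229', 'p20240301', 'p20240302']
```

With a window such as start = -30 and end = 3 (values chosen only for illustration), a log table would hold about a month of daily partitions plus a few days created ahead of incoming writes.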

Wrap up with figures

After the migration, the Credit Card Center measured the performance gains and cost reductions. In one of its data centers, the Apache Doris-based log storage and analytics platform delivered substantial improvements over the legacy Elasticsearch-based architecture:

- 50% resource savings: CPU usage dropped to around 50%, and overall resource consumption was cut in half. For the same volume of data, Elasticsearch required 10 TB of storage, while Apache Doris needed only 4 TB, thanks to efficient ZSTD compression.

- 2-4× faster queries: The new architecture delivered 2-4× query performance gains while using less CPU.

- Enhanced observability: By integrating logs with tracing, metrics, and alerting systems, Apache Doris has enabled more powerful capabilities such as log pattern recognition, classification and aggregation, log convergence, and anomaly detection.

- Improved efficiency in operation and maintenance: The new platform offers lightweight installation, user-friendly management tools, and simpler service setup, configuration, monitoring, and alerting.

They now plan to roll out Apache Doris across their remaining data centers and extend it to more use cases, building toward a data lakehouse.

Talk to us

If you want to bring similar (or even greater) performance improvements to your data platform, or simply learn more about Apache Doris, you are welcome to join the Apache Doris community, where you can connect with other users facing similar challenges and get professional technical advice and support.

If you're exploring fully-managed, cloud-native options, you can reach out to the VeloDB team!