In 2025, AI is entering the agentic era. AI no longer just answers prompts; it is evolving into autonomous agents that can think, act, design workflows, and complete complex tasks. To do all that, AI agents need constant access to fresh, relevant data.

But traditional databases can't keep up. They weren't built for the scale, speed, or diversity of data that agentic AI demands. Meeting the data needs of AI agents requires a new kind of analytical database.

Apache Doris is built to meet the data challenges in the agentic AI era, delivering real-time analytics at scale. But to use Doris's power for AI agents, you need a bridge between them. That's where Doris MCP Server comes in, acting as a communication layer between AI agents and Doris.

In this article, we'll explore how agentic AI is rewriting the rules for analytics and how MCP connects AI agents to data sources, then walk through two demos that bring AI agents, MCP, and Apache Doris together in action.

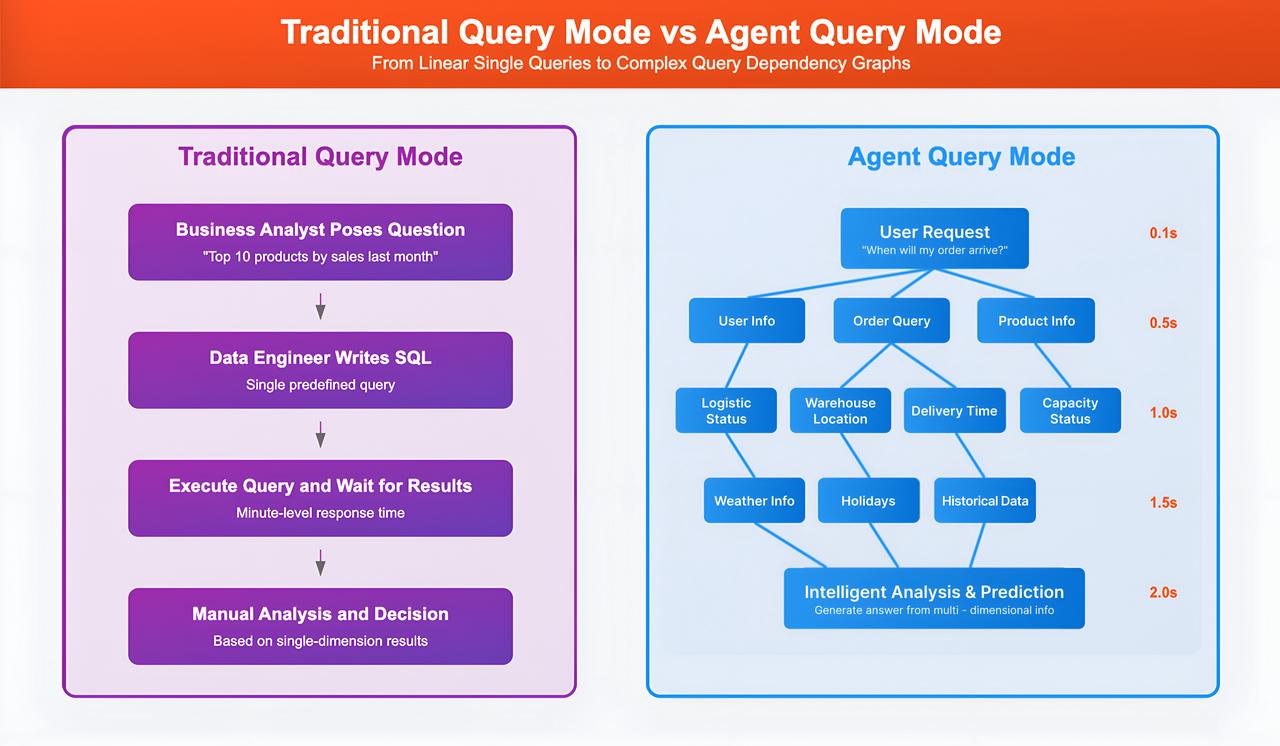

How AI Agents Work Differently and What They Demand from Analytical Databases

AI agents are changing data analytics from being user-facing to agent-facing. In other words, the primary consumers of data are no longer just humans, but both humans and AI agents. As the number of AI agents continues to grow, they will bring three major challenges for databases:

- High concurrency and frequent access: Data systems need to handle thousands or even tens of thousands of concurrent queries from agents, and process data at gigabyte to terabyte scale and beyond.

- Real-time performance: Agents expect responses within seconds, which traditional batch processing can't deliver.

- Diverse data types: Agents need to work with all kinds of data — structured, semi-structured, and unstructured, including text, audio, and video. Databases must be flexible enough to support this diversity.

After years of development, Apache Doris is well-positioned to meet these challenges. Doris is built to meet the real-time, high-concurrency, and diverse data needs of AI agents, with an architecture that supports accessing data from multiple sources and innovations like query optimization and vector search.

Read below to learn how Apache Doris supports AI agents in detail:

1. Handling High Concurrency

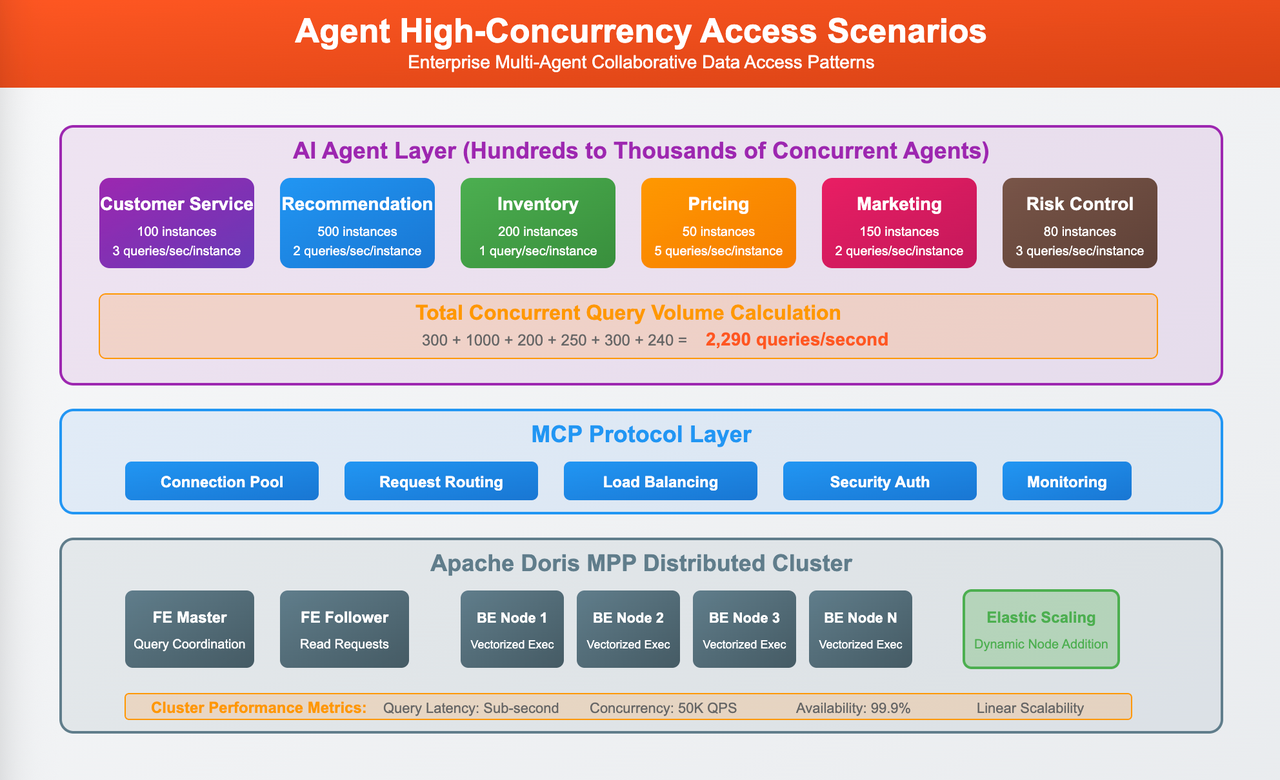

Let's walk through an example of agentic AI. A typical e-commerce platform might run hundreds of agents, handling tasks such as customer service, product recommendations, inventory management, pricing, marketing, and risk control. These agents constantly query databases, far more frequently and with greater complexity than traditional human-application interactions. Mid-sized companies may need to handle thousands or even tens of thousands of concurrent queries from AI agents, while large enterprises could see agent numbers and query volumes grow exponentially.

Apache Doris's MPP (Massively Parallel Processing) architecture offers distinct advantages in these high-concurrency scenarios. Unlike traditional master-slave designs, Doris uses a masterless, distributed design where each backend (BE) node can independently process query requests, avoiding single-point bottlenecks. When dealing with high-concurrency access from agents, Doris can scale query performance linearly simply by adding more BE nodes.

Apache Doris also offers a vectorized execution engine, which takes full advantage of modern CPUs' SIMD (Single Instruction, Multiple Data) capabilities. This allows Doris to efficiently process large volumes of agent-generated queries involving aggregation and filtering, boosting performance by 5 to 10 times.

2. Meeting Real-Time Demands

Agent-based applications demand an unprecedented level of real-time data access. While traditional BI reports can tolerate data delays of hours or even days, agents need to make instant decisions based on the latest data. For example, a risk control agent must analyze a user's live transaction behavior, credit history, and current account status within seconds to assess potential fraud risks. Any data delay can lead to misjudgements.

This is where Apache Doris shines, with real-time processing and high-concurrency primary key point query capabilities. Leveraging built-in features and ecosystem integrations, Doris achieves sub-second data ingestion latency, ensuring agents always access the latest business data. Doris's majority write rules also guarantee consistency between query and write operations, avoiding common read-write conflicts found in traditional data warehouses.

Combined with hybrid row-column storage and partial column updates, these capabilities let Doris excel at primary key point queries in scenarios such as real-time user profiling and risk control, offering AI agents millisecond-level responses at massive scale.

3. Smarter Query Optimization

Queries generated by AI agents are often highly dynamic and unpredictable. Unlike traditional, predefined reports, agent queries are generated on the fly—based on user requests and contextual information—making conventional query optimization methods less effective. Agents may produce seemingly "odd" query combinations or perform multiple, multidimensional analyses on the same dataset within a short period.

Apache Doris's cost-based optimizer (CBO) is built to handle these challenges. Doris can dynamically generate the most efficient execution plan based on actual data distribution and query patterns. Doris also supports multiple index types and can automatically select the most suitable indexing strategies for agent queries, improving query performance by 5-10x.

4. Accessing Diverse Data Sources: Federated Query

In traditional data analytics workflows, data is typically pre-modeled and stored in a centralized data warehouse, where human analysts retrieve it based on specific business needs. But AI agents require access to a much broader range of data sources—including real-time operational data, historical data from data lakes, and external APIs. Creating separate connectors for each data source not only increases system complexity but also limits the agent's ability to make intelligent, timely decisions.

AI agents need a more flexible and unified data access approach. Apache Doris offers federated queries, unifying data access across data sources through its Multi-Catalog feature. With a unified SQL interface in Doris, agents can seamlessly access data from traditional databases like MySQL, PostgreSQL, and Oracle; data lake formats such as Iceberg, Hive, Hudi, and Paimon; as well as cloud storage like S3 and HDFS. This gives agents a unified view of the data landscape, enabling more accurate and holistic analysis.

Doris also provides a query optimizer that can identify cross-source queries and automatically choose the best execution strategy. It determines which tasks to push down to source systems and which to execute within Doris, maximizing overall query efficiency.
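To make the unified SQL interface concrete: with Multi-Catalog, a table is addressed by a three-part name, `catalog.database.table`, so one statement can join Doris's own tables (in the built-in `internal` catalog) with tables in an external catalog. The sketch below only builds such a statement as a string. The external catalog `mysql_crm`, the databases, the tables, and the columns are all hypothetical names chosen for illustration.

```python
import re

# Only plain identifiers are allowed, so agent-supplied names cannot smuggle in SQL.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def qualified(catalog, database, table):
    """Build a three-part table name: catalog.database.table."""
    for part in (catalog, database, table):
        if not _IDENTIFIER.match(part):
            raise ValueError(f"unsafe identifier: {part!r}")
    return f"{catalog}.{database}.{table}"


def build_federated_query(orders_table, customers_table):
    """Join a Doris table with an external table in a single statement."""
    return (
        "SELECT c.segment, SUM(o.amount) AS revenue\n"
        f"FROM {orders_table} AS o\n"
        f"JOIN {customers_table} AS c ON o.customer_id = c.customer_id\n"
        "GROUP BY c.segment"
    )


# `internal` is Doris's built-in catalog; `mysql_crm` is a hypothetical
# external catalog that would have to be created in Doris beforehand.
sql = build_federated_query(
    qualified("internal", "sales", "orders"),
    qualified("mysql_crm", "crm", "customers"),
)
print(sql)
```

From the agent's point of view the cross-source join is ordinary SQL; deciding what to push down to the external source is left to the optimizer described above.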

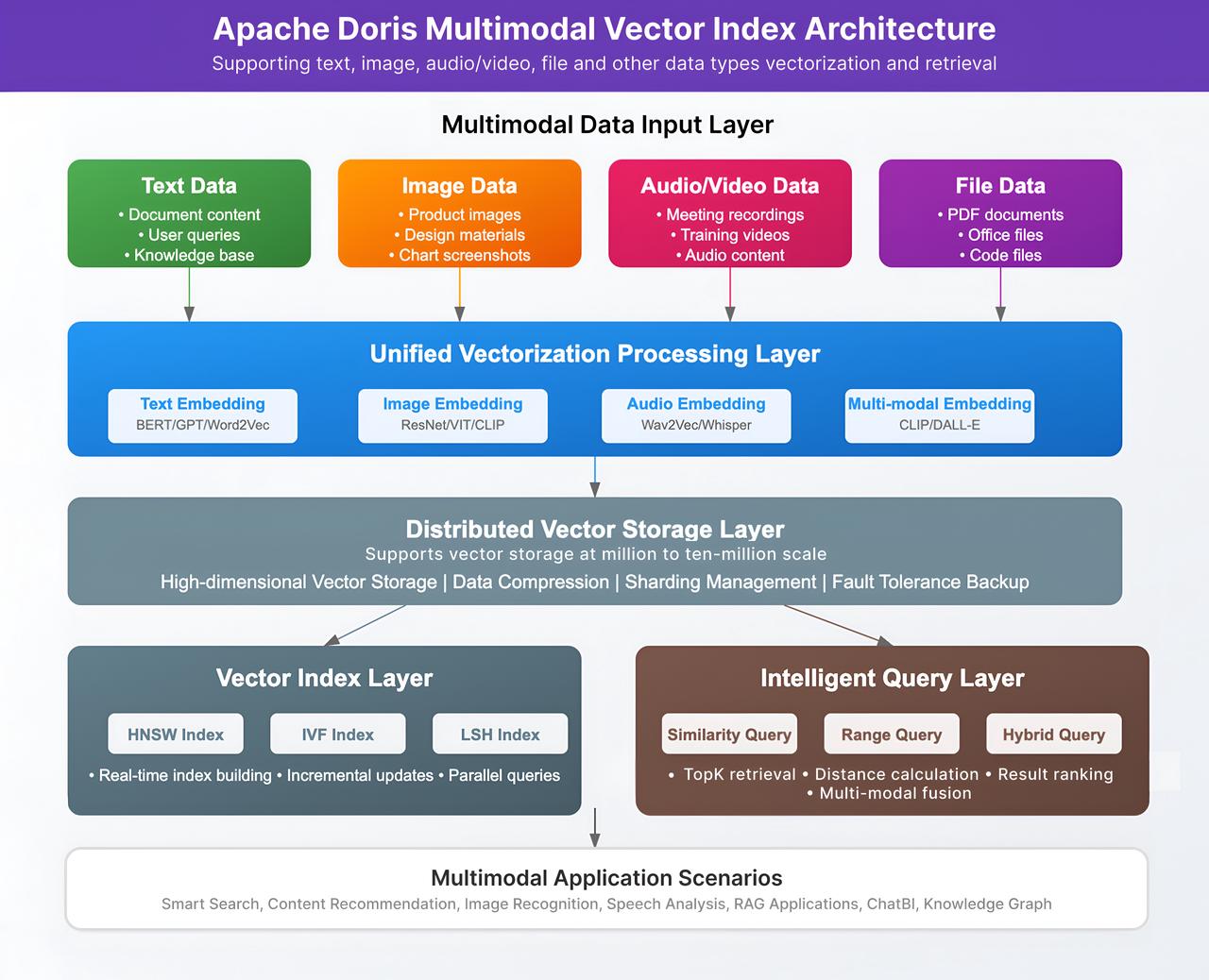

5. Multimodal Data: Vector Search

As AI agents become more advanced, especially those powered by large language models and techniques like Retrieval-Augmented Generation (RAG), they increasingly need to search and reason over unstructured data—such as text, images, and audio. This requires vector search, which allows agents to find semantically similar content based on meaning rather than exact matches. Traditionally, this meant deploying a separate vector database, which brings challenges like data inconsistency, complex queries, and added operational cost.

Apache Doris will introduce native support for vector data types and vector indexes in the upcoming Apache Doris 4.0 in 2025—enabling agents to seamlessly combine structured and vector data in a single query for true multimodal analysis.
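To make "semantically similar" concrete, here is a minimal sketch of what a vector search computes: it ranks stored embeddings by cosine similarity to a query embedding. This is a brute-force illustration in plain Python, not how Doris implements vector indexes, and the three-dimensional embeddings are made-up toy values rather than real model output.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, documents, k=2):
    """Return the ids of the k documents most similar to the query."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in documents.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]


# Toy embeddings (hypothetical values for illustration only)
documents = {
    "refund_policy": [0.9, 0.1, 0.0],
    "shipping_times": [0.1, 0.9, 0.1],
    "return_an_item": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]  # stands in for the embedding of "how do I get my money back?"

result = top_k(query, documents)
print(result)
```

A vector index exists to avoid this full scan: it trades a little accuracy for speed so the same ranking can be approximated over millions of rows.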

6. Security

The autonomy and high-frequency access patterns of AI agents introduce unique security challenges. Unlike traditional data access, which often involves human oversight, agent decisions are automated—requiring the underlying data system to be secure, auditable, and resilient by design.

Apache Doris addresses this with a layered security framework:

- Fine-grained access controls define exactly what data each agent can access and what operations it's allowed to perform.

- Detailed audit logs capture every access event from agents, enabling quick detection of suspicious behavior.

- Built-in protections like SQL injection prevention and query complexity limits help safeguard system stability from misbehaving or malicious agents.

- Data encryption at rest and in transit ensures sensitive information remains secure during storage and transmission.

7. Observability

As more people use AI and more companies build AI agents to improve operations, AI companies are seeing exponential growth and increasing pressure on their underlying data infrastructure. That's when observability becomes mission-critical.

A modern data platform like Apache Doris can help AI companies scale their observability systems to keep up with the surging demand. With real-time ingestion, fast queries, high concurrency, and lower costs, Doris can help AI companies monitor the exploding volumes of metrics, traces, logs, and events, giving them the ability to catch and fix backend issues in real time.

What Is the Model Context Protocol (MCP)?

Model Context Protocol (MCP) is a protocol that standardizes how AI systems connect with external data, services, and tools. It was released by Anthropic in November 2024 and quickly took off, becoming one of the most popular underlying technologies in AI.

MCP is often described as a "USB-C port for AI applications". Just as USB-C standardizes how devices connect to accessories, MCP standardizes how AI connects to different databases, data sources, and other tools. Basically, MCP gives AI agents and LLMs consistent interactions across tasks such as reading local files, querying remote databases, or streaming web data, no matter how different the sources are behind the scenes.

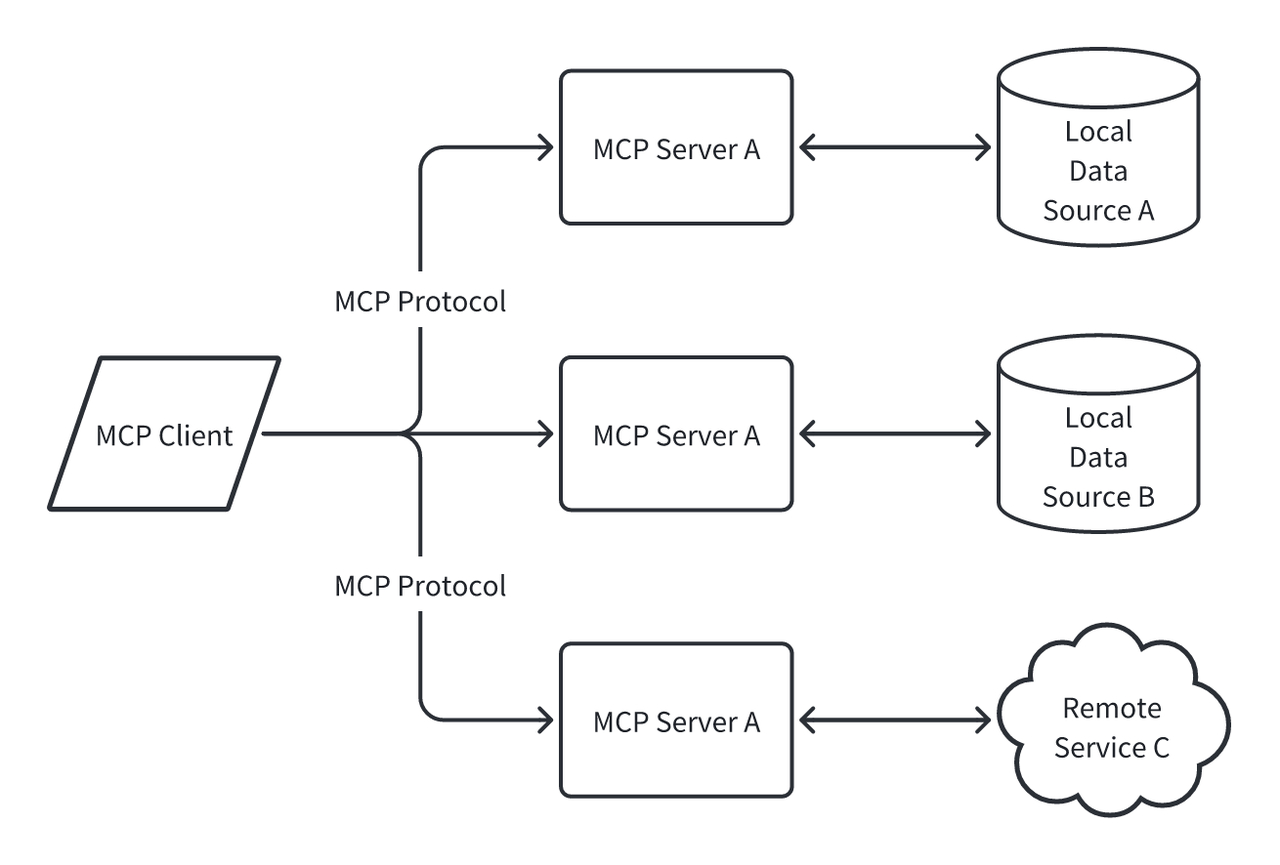

MCP adopts a Client-Server architecture, with three core concepts:

- MCP Client: A client program used to access the MCP Server, such as Cursor, Claude Desktop, IDEs, etc.

- MCP Server: A lightweight program that provides specific functional interfaces through the standardized MCP protocol.

- Local Data Sources & Remote Services: Resources that can be accessed by the MCP Server, such as local files, web links, remote services, and so on.

MCP is built on top of the JSON-RPC 2.0 protocol. JSON-RPC 2.0 provides the structured, JSON-formatted message layer that defines how requests, responses, and notifications are exchanged between MCP clients and servers.
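As a rough illustration of that message layer, the sketch below builds the JSON-RPC 2.0 request an MCP client sends to invoke a server tool. The `tools/call` method and its `name`/`arguments` parameters come from the MCP specification, and `exec_query` is one of the Doris MCP Server tools described later in this article. The `sql` argument name is an assumption, so check the tool's published input schema before relying on it.

```python
import itertools
import json

_ids = itertools.count(1)  # each request needs an id so the response can be matched


def make_request(method, params):
    """Build a JSON-RPC 2.0 request object."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


def make_tool_call(tool_name, arguments):
    """Build an MCP `tools/call` request for the given tool."""
    return make_request("tools/call", {"name": tool_name, "arguments": arguments})


def is_success(response, request):
    """A response succeeds if it echoes the request id and carries `result`, not `error`."""
    return (
        response.get("jsonrpc") == "2.0"
        and response.get("id") == request["id"]
        and "result" in response
        and "error" not in response
    )


# The `sql` argument name is assumed for illustration.
request = make_tool_call("exec_query", {"sql": "SELECT 1"})
wire = json.dumps(request)  # this string is what travels over the transport
print(wire)
```

Notifications follow the same shape but omit `id`, which tells the receiver that no response is expected.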

Moreover, MCP offers strong security features. It includes built-in mechanisms for identity verification and access control, supporting popular methods like API keys, OAuth 2.0, and JWT. With fine-grained permission controls, developers can precisely define which resources an AI agent can access and what actions it can perform. This ensures data security for enterprise-grade AI applications.

MCP is also deeply optimized for performance. It supports connection multiplexing and reuse, batch operations, and stream processing — good for AI applications that demand high throughput and low latency.

Apache Doris MCP Server: Connecting Agents and LLMs with Doris

Apache Doris MCP Server gives AI access to Doris. Through the Doris MCP Server, LLMs and agents can access and analyze the data stored in Doris.

To better understand how the Doris MCP Server addresses real-world needs, let's first look at the core data access demands of different AI agent use cases.

1. Understanding Different Agent Use Cases and MCP Requirements

Different types of agents have different demands when accessing data. By analyzing popular agent use cases, we can identify common patterns and core technical challenges that MCP must address. These insights provide guidance for technical implementation.

| Agent Type | Primary Function | Core Characteristics | Performance Requirements | Data Access Characteristics | MCP Protocol Requirements |
|---|---|---|---|---|---|
| ChatBI Agent | Natural language data analytics | | | | |
| Intelligent Customer Service Agent | Real-time customer service support | | | | |
| Recommendation System Agent | Personalized recommendation engine | | | | |
| Risk Control System Agent | Real-time risk prevention | | | | |
| Knowledge Base Agent | Document retrieval and knowledge Q&A | | | | |

2. Technical Implementation of the Doris MCP Server

The technical design of the Doris MCP Server reflects a deep understanding of AI-era data access needs. Built with Python and FastAPI, the server supports rapid development, code quality, and strong compatibility with modern AI tech stacks.

A. Multi-Mode Protocol Support

Doris MCP Server adopts a multi-mode design to support different protocol transports, accommodating different deployment scenarios and application requirements.

- Server-Sent Events (SSE) mode provides real-time, two-way communication over HTTP (the server streams events to the client, and the client sends its requests as HTTP POSTs), ideal for web application integrations. This mode leverages existing HTTP infrastructure, simplifying deployment and reducing operational overhead.

- Streamable HTTP mode is specifically optimized for large-scale queries. This mode supports streaming data transmission, efficiently handling query results at the gigabyte or even terabyte scale. This mode is currently under testing and is expected to be released in the next version.

- Stdio mode, a standard input/output interface, provides the best compatibility for integration with development tools and modern IDEs like Cursor.

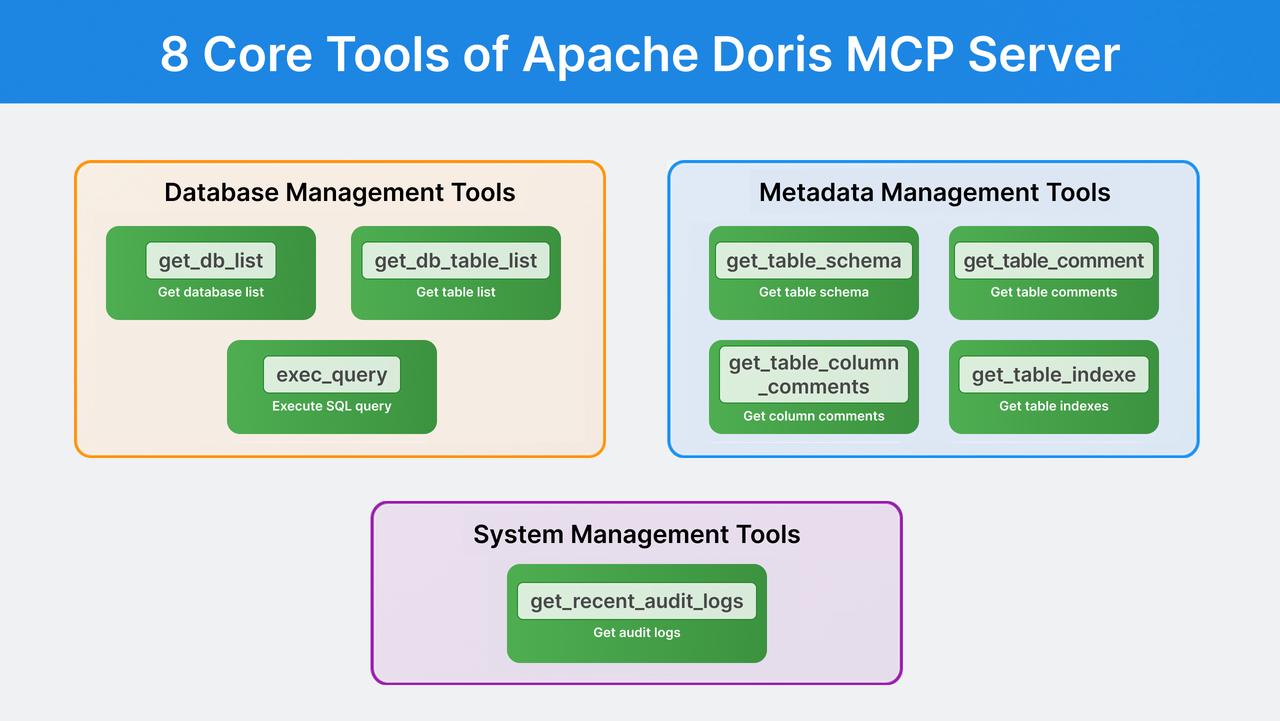

B. Core Tools of Doris MCP Server, a Systematic Design

Doris MCP's tool collection adopts systematic design thinking, supporting the full lifecycle of database interaction for AI applications:

- `exec_query` serves as the core SQL execution engine, with built-in features like security checks, parameter validation, result optimization, and others.

- Metadata-related tools like `get_table_schema` and `get_table_column_comments` help agents understand the structure of the data.

- Auditing tools like `get_recent_audit_logs` ensure enterprises follow compliance requirements.

C. Optimization for Database Interaction

At the database interaction layer, Doris MCP Server introduces several performance and stability optimizations:

- Smart connection pool management: Dynamically adjusts the number of connections based on load, ensuring performance without wasting resources.

- Query execution optimization: Includes automatic SQL safety checks, intelligent LIMIT addition, and optimized result serialization for faster responses under high concurrency.

- Query timeout and resource controls: Prevent excessive resource usage and improve system robustness in demanding scenarios.
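To give a feel for what "SQL safety checks" and "intelligent LIMIT addition" can mean in practice, here is a minimal sketch of both ideas. It is not the Doris MCP Server's actual implementation: the allowed statement prefixes, the default limit of 1000, and the function names are assumptions made for illustration, and a production check would use a real SQL parser rather than regular expressions.

```python
import re

DEFAULT_LIMIT = 1000  # hypothetical default; a real server would make this configurable

_READ_ONLY = re.compile(r"(select|show|describe|explain|with)\b", re.IGNORECASE)
_TRAILING_LIMIT = re.compile(
    r"\blimit\s+\d+(\s*,\s*\d+|\s+offset\s+\d+)?\s*$", re.IGNORECASE
)


def _normalize(sql):
    """Trim whitespace and one trailing semicolon."""
    return sql.strip().rstrip(";").strip()


def is_read_only(sql):
    """Conservative check: a single statement that starts with a read-only keyword."""
    stripped = _normalize(sql)
    if ";" in stripped:  # rejects multi-statement input (and, conservatively, ';' in literals)
        return False
    return bool(_READ_ONLY.match(stripped))


def add_default_limit(sql, limit=DEFAULT_LIMIT):
    """Append LIMIT to a SELECT that does not already end with one."""
    stripped = _normalize(sql)
    if not re.match(r"select\b", stripped, re.IGNORECASE):
        return stripped  # leave SHOW, DESCRIBE, etc. untouched
    if _TRAILING_LIMIT.search(stripped):
        return stripped  # the caller already bounded the result
    return f"{stripped} LIMIT {limit}"


print(add_default_limit("SELECT * FROM orders"))
print(is_read_only("SELECT 1; DROP TABLE orders"))
```

Bounding results by default matters more for agents than for human analysts, because an agent may issue an unbounded `SELECT *` without realizing the table holds billions of rows.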

D. Federated Query: Unified Access Across Data Sources

Thanks to the Multi-Catalog feature in Apache Doris, the Doris MCP Server has unified access across multiple data sources, breaking the limitations of a single data source and giving AI agents a broader and more integrated data view.

Currently supported sources include mainstream relational databases (MySQL, PostgreSQL, Oracle, SQL Server), big data ecosystems (Iceberg, Hive, Hudi, Paimon), and cloud storage (S3, HDFS, MinIO, OSS). This wide data source support empowers AI applications to integrate and analyze data from most popular systems, all through the single interface of Doris MCP Server.

Apache Doris MCP Server Demos

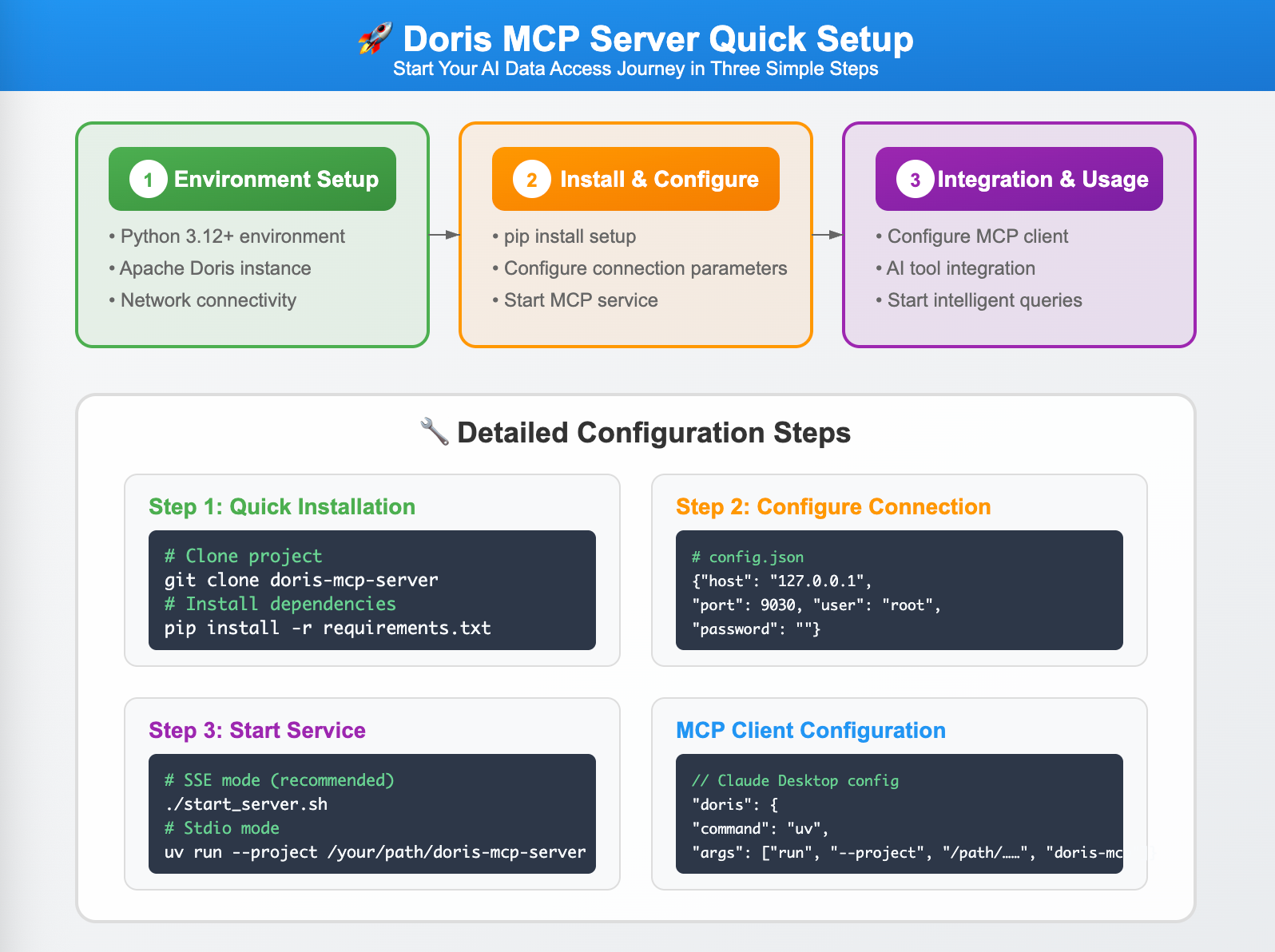

Get Apache Doris MCP Server: https://github.com/apache/doris-mcp-server

Doris MCP Server is designed with a plug-and-play philosophy, so deployment is fast and straightforward. First, start the Doris cluster using Docker, then configure connection parameters and security options through a config file. Finally, start the MCP Server. The whole process typically takes about 10 minutes.

Performance-wise, we have validated Doris MCP Server through a series of benchmark tests.

- Query latency: Simple queries return within seconds on average, and complex aggregation queries also complete within seconds, so AI applications can provide a smooth user experience.

- Concurrency: A single MCP Server instance can handle hundreds of concurrent query requests, while a cluster deployment can easily scale to thousands of concurrent requests.

Demo 1: Using Doris MCP Server and Dify to Build ChatBI

This demo uses the Server-Sent Events transport mechanism of MCP.

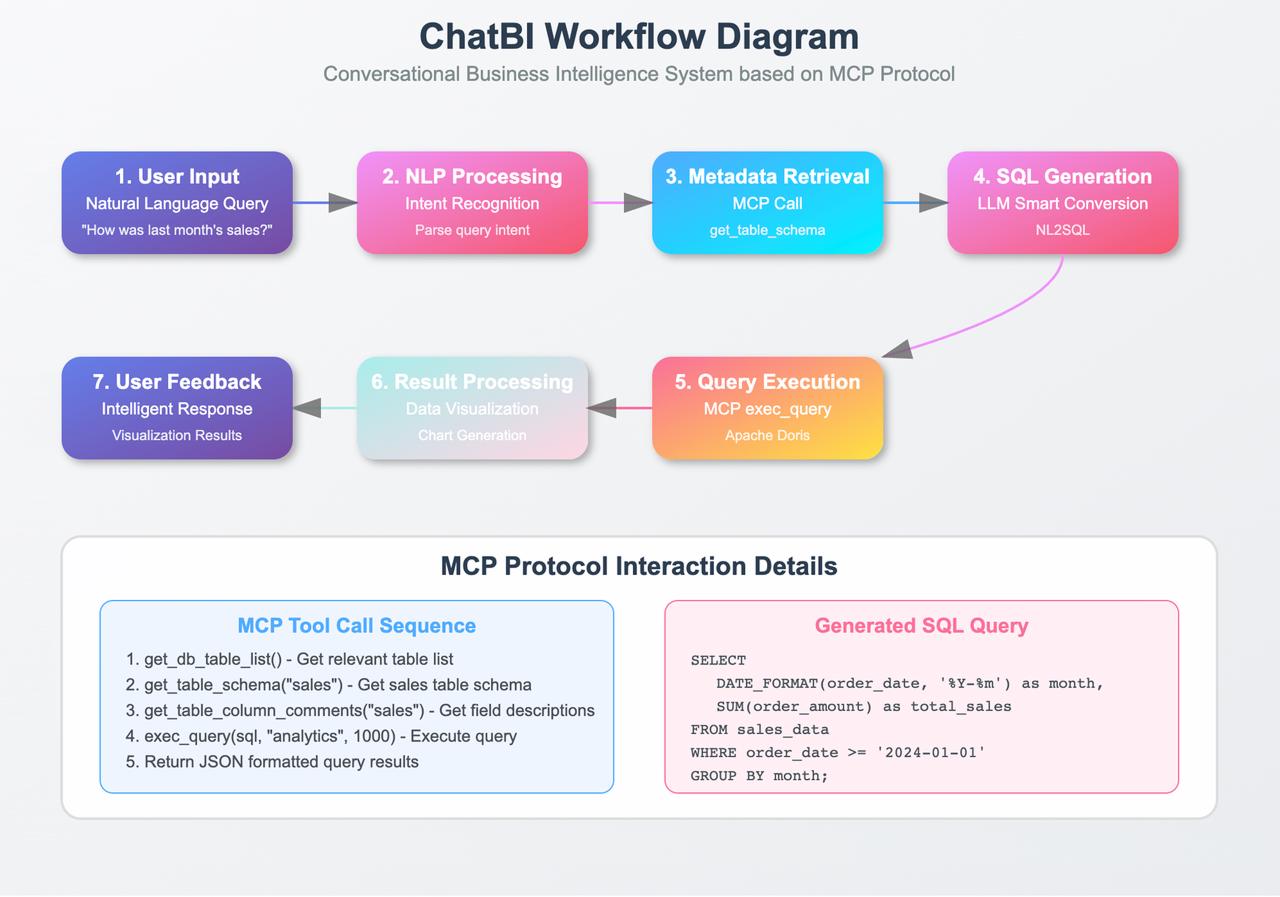

In this demo, we are using Doris MCP Server and Dify (a low-code AI app builder) to build a ChatBI application. ChatBI is a popular agentic AI application that lets users analyze data and generate business insights using natural language (typically through an AI chat interface) rather than writing complex queries or using traditional dashboards.

In the technical architecture of ChatBI, the Doris MCP Server acts as a bridge between the AI agent (ChatBI) and the database (Apache Doris). When a user asks a data analysis question in the chat interface, ChatBI first interprets the query intent using natural language processing. It then interacts with Doris through Doris MCP Server's core tools, in several steps:

- It calls `get_db_table_list` to retrieve relevant table names

- Uses `get_table_schema` to understand the data structures of those tables

- Generates the appropriate SQL query based on that information

- And finally executes the query via `exec_query` and returns the results to the user
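The steps above can be sketched as a short orchestration loop. The three tool names are the ones listed above. Everything else is a stand-in: `FakeMcpClient` replaces a real MCP client session with canned in-memory responses, `generate_sql` replaces the LLM step, and the argument names (`db_name`, `table_name`, `sql`) are assumptions rather than the server's documented schema.

```python
class FakeMcpClient:
    """In-memory stand-in for an MCP client session (hypothetical)."""

    def __init__(self):
        self.calls = []  # records tool names in call order
        self._tables = {"orders": ["order_id", "region", "amount"]}

    def call_tool(self, name, arguments):
        self.calls.append(name)
        if name == "get_db_table_list":
            return list(self._tables)
        if name == "get_table_schema":
            return self._tables[arguments["table_name"]]
        if name == "exec_query":
            return [{"region": "EMEA", "total": 1200}]  # canned rows, not real data
        raise ValueError(f"unknown tool: {name}")


def generate_sql(question, table, columns):
    """Placeholder for the LLM step that turns a question plus schema into SQL."""
    return f"SELECT region, SUM(amount) AS total FROM {table} GROUP BY region"


def answer(client, question, db_name="demo"):
    """Discover tables, read the schema, generate SQL, then execute it."""
    tables = client.call_tool("get_db_table_list", {"db_name": db_name})
    table = tables[0]  # a real agent would let the LLM choose the relevant tables
    columns = client.call_tool(
        "get_table_schema", {"db_name": db_name, "table_name": table}
    )
    sql = generate_sql(question, table, columns)
    return client.call_tool("exec_query", {"sql": sql})


client = FakeMcpClient()
rows = answer(client, "What is total revenue by region?")
print(client.calls, rows)
```

Fetching the schema before generating SQL is what keeps the model grounded: it writes the query against column names it has just read instead of guessing them.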

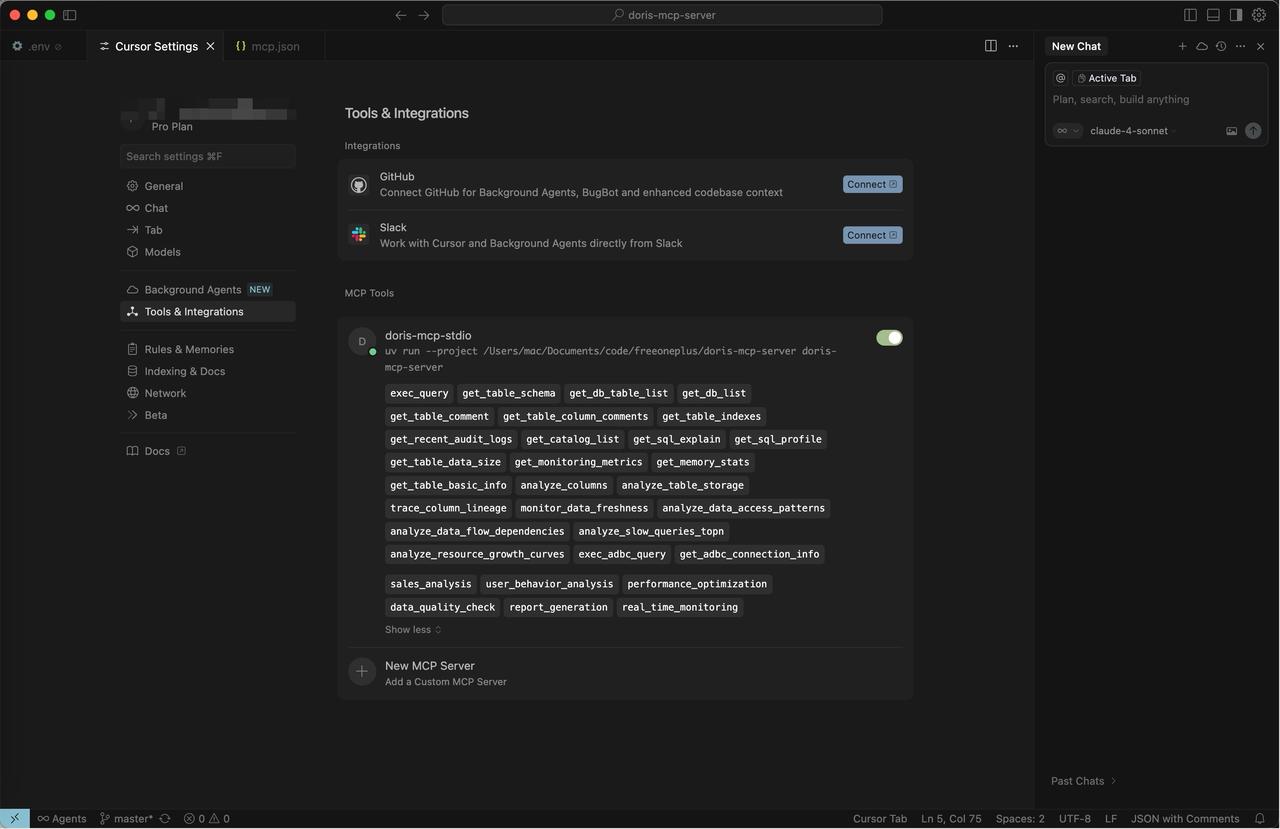

Demo 2: Using Doris MCP Server in Cursor for Development

This demo uses the Stdio transport mechanism of MCP.

In this demo, we are completing similar BI, data processing, and analytic tasks as in the previous demo, but in the Cursor environment.

Cursor is a popular AI-powered code editor with built-in support for the MCP protocol. It can act as an MCP client (in the HOST role) and communicate with various MCP servers. Cursor supports multiple MCP transport mechanisms, including Stdio (standard input/output) and Server-Sent Events.

Stdio is the preferred option in this case thanks to its ultra-low latency and minimal resource usage, making it ideal for frequent interactions common in development.

To run this demo:

- Add the Doris MCP Server in Cursor's MCP Servers settings and complete the configuration.

- Use the AI chat tab in Cursor to query the database in natural language, with no need to write SQL.

This setup allows developers to perform data queries and analysis directly within the code editor. It boosts productivity by eliminating context-switching, especially in tasks like inspecting tables, analyzing data distribution, or validating business logic.
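For the configuration step, Cursor reads MCP server definitions from an `mcp.json` file whose top-level `mcpServers` object maps a server name to the command that launches it. The sketch below generates such an entry for a stdio server. The launch command, its flags, and the environment variable names are assumptions for illustration, so take the real values from the doris-mcp-server repository's README. The host, user, and password are placeholders.

```python
import json


def build_cursor_mcp_config(host, port, user, password):
    """Build an `mcpServers` entry for a stdio-based Doris MCP Server."""
    return {
        "mcpServers": {
            "doris": {
                # Command and flags are assumed; check the project's README.
                "command": "doris-mcp-server",
                "args": ["--transport", "stdio"],
                # Environment variable names are assumed as well.
                "env": {
                    "DORIS_HOST": host,
                    "DORIS_PORT": str(port),  # env values must be strings
                    "DORIS_USER": user,
                    "DORIS_PASSWORD": password,
                },
            }
        }
    }


# 9030 is the default MySQL-protocol query port of a Doris frontend.
config_text = json.dumps(
    build_cursor_mcp_config("127.0.0.1", 9030, "root", ""), indent=2
)
print(config_text)
```

With stdio, Cursor starts the server as a child process and exchanges messages over its standard input and output, so no network port needs to be opened for the MCP connection itself.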

Conclusion

Agentic AI is reshaping the data analytics landscape, demanding data systems that are fast, scalable, and flexible. Apache Doris offers capabilities that help companies meet AI agents' demand for data: real-time analytics, high-concurrency query handling, observability for large-scale data volumes, and multimodal data support through vector search.

With the Doris MCP Server, companies can plug AI agents, LLMs, and other systems directly into Doris—unlocking the full power of their data. It's not just about keeping up with AI. It's about staying ahead. Apache Doris and Doris MCP Server can help you get ahead of competitors.

If you want to learn more about using Apache Doris and Doris MCP Server for your data platform, or about Apache Doris in general, you are more than welcome to join the Apache Doris community on Slack, where you can connect with other users facing similar challenges and get access to professional technical advice and support.

If you're exploring fully-managed, cloud-native options, you can reach out to the VeloDB team!